Unlike previous years, this year was almost all work. I didn’t really have any free time (what little time I had was devoted to the fine art of inebriation, with many thanks to Motio for doing their part), so I couldn’t give a daily overview. On the flip side, I did have much greater access to the IBM developers this time, and had the chance to ask a few of my most urgent questions. This is actually my primary reason for attending IBM Insight – the chance to look them in the eye and hopefully get some straight answers. There were a few people who dropped by the booth to try to chat, but apparently I was never there. Sorry! I have a tendency to wander around and collect pens/USB sticks/tchotchkes for the kids. If it’s still relevant, drop me a line and we’ll chat.

I’m sure everyone is wondering about the new version of Cognos. The most important thing first. I do not need to change the name of my blog – IBM is sticking with the Cognos moniker. It is now christened Cognos Analytics, but for the purpose of abbreviations I’ll just call it C11.

The authoring environment has undergone a major refactoring. The Report Studio we all know and love (well, I love it) has had a substantial face-lift. The Report Viewer has also undergone many changes. Previously an authored report was essentially static. You could, in theory, write JS to manipulate objects on the page but that always came with some level of risk. Dashboarding and datasets have also gone under the knife and have emerged substantially improved.

Unfortunately I don’t have access to the C11 demo yet. Thus any screenshots will be from YouTube videos. As soon as I do get access, I’ll try to publish some articles with original media.

Authoring

The immediate reaction is that it seems to be a completely new tool. The menu bar at the top is gone, clicking on certain regions opens up a circular context menu, and the toolbox is actually arranged in a logical format.

This is actually still using rsapp.htm. In theory, all of the same functionality is still there. The locations for the menu options have been moved, and this time they actually feel logical. To get to the report properties, for example, you don’t go through the File menu option – you actually click on the report object. Additional report properties have also been moved here, so it does make things a little easier to find. Moving things around does have its drawbacks – in the hands-on demo it took me a minute to find the location to switch from an individual page to a specific query. A few other features took me some time to find.

When a table or block is dragged in, a plus icon appears in the center. Clicking on that creates a radial context menu.

The items that appear in the menu are the items from the “pinned” section in the toolbox, which report authors can easily change.

Another very positive change is that the query explorer now shows all objects associated with each query. Expand a query, and jump to the object by clicking on it. The more complex reports tend to accumulate a lot of QueryNs, so this should speed up cleaning up the reports.

Reports built in this new RS version will automatically open in the new Report Viewer (this is a property on the report level which can be changed). The new report viewer should work with the Prompt API (though the hands-on was getting an error when I tried), but all other JS is likely to cause issues. This should be okay, as a majority of the JS that I write has some similar functionality. The report viewer appears to be a modified Workspace Advanced. End users can make various simple modifications to the report output, such as re-sorting or basic calculations in lists or crosstabs. Users will only be able to save those changes if they have write access to the report, or if they save a report view in their folders. There are plans to extend the published API, but I’ve heard no specifics yet.

Reports upgraded to C11 will continue to run in the old report viewer! This means there is a very good chance I won’t get frantic calls to fix broken reports. As usual I received no promises – only “in theory” and “should”.

Some of my biggest requests – master/details on singletons (everyone should vote on it here: https://www.ibm.com/developerworks/rfe/execute?use_case=viewRfe&CR_ID=62883) and adding native functions to the dimensional function set (TM1 especially, it’s an IBM product! Why can’t I use the TM1 functions?! I should stop before this turns into a TM1 rant.) – have not yet materialized.

Analysis Studio and Query Studio are still included in this version! While the direction is to remove them eventually, there are a few gaps that couldn’t be overcome, specifically with getting the filters to show at the top. But this is the last version with AS and QS, for reals this time!

Datasets are very interesting.

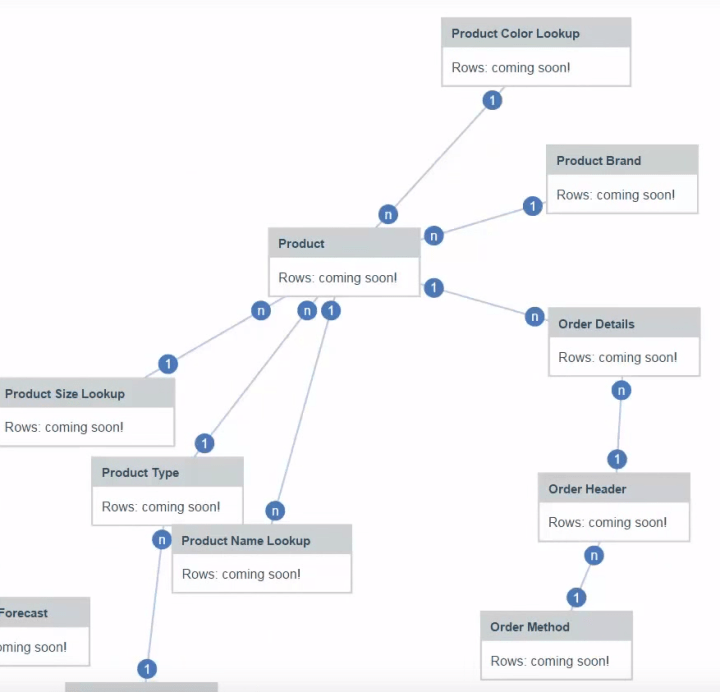

Unlike previous versions, you can create datasets on existing packages. Drag in the fields you want and Cognos will save the results in a local table. This will be, by default, on a DB2 instance installed automatically by Cognos. This is essentially a materialized view, but I don’t have any information on scheduling it. I am guessing that there will be governors administrators can set on this – number of rows, size of output, max runtime of query, and similar settings.
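For anyone unfamiliar with the materialized view idea, here is a minimal sketch in Python. To be clear, this is not how Cognos implements datasets: it uses an in-memory SQLite database as a stand-in for the bundled DB2 instance, and the `materialize_dataset` function, the `sales` table, and the `max_rows` "governor" are all hypothetical names I made up for illustration. It only shows the general pattern: run the query once, store the result in a local table, and read from that table afterwards.

```python
import sqlite3


def materialize_dataset(conn, name, select_sql, max_rows=None):
    """Run select_sql once and store its result in a local table.

    Hypothetical helper for illustration only. max_rows stands in for
    the kind of row-count governor an administrator might set.
    """
    # The table name is interpolated into SQL, so accept simple identifiers only.
    if not name.isidentifier():
        raise ValueError(f"invalid dataset name: {name!r}")
    limit = f" LIMIT {int(max_rows)}" if max_rows is not None else ""
    conn.execute(f"DROP TABLE IF EXISTS {name}")
    conn.execute(f"CREATE TABLE {name} AS SELECT * FROM ({select_sql}){limit}")
    conn.commit()
    # Report how many rows were stored.
    return conn.execute(f"SELECT COUNT(*) FROM {name}").fetchone()[0]


# Made-up source data standing in for a package.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("EMEA", "Tents", 100.0), ("EMEA", "Stoves", 50.0), ("Americas", "Tents", 200.0)],
)

query = "SELECT region, SUM(revenue) AS revenue FROM sales GROUP BY region"
stored_rows = materialize_dataset(conn, "sales_by_region", query)

# New source rows do not show up in the stored table until it is rebuilt.
conn.execute("INSERT INTO sales VALUES ('EMEA', 'Lamps', 25.0)")
snapshot = dict(conn.execute("SELECT region, revenue FROM sales_by_region").fetchall())

# Rebuilding with a row cap shows the governor stand-in at work.
capped_rows = materialize_dataset(conn, "sales_capped", query, max_rows=1)
```

The stored table is a snapshot: it stays as it was when the dataset was built, which is why the scheduling question matters so much.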

The dataset tool is completely web-based, and is supposed to be simple enough for end users. In theory it should automatically determine how the joins should be built. See more here: https://www.youtube.com/watch?v=cYlbiWeBgtA

These datasets are designed to feed the new dashboarding system, which is truly impressive.

The dashboarding tool is obviously designed for analysts and end users. It does not offer the wide range of options and capabilities we’ve come to love in RS. However, it does many things that RS can’t do. The tool only works with the datasets, so the response time is fast – the data is already aggregated to the user’s needs and it’s automatically using DQM.

Drag in a measure, and it will create a block with that value. Drag an attribute on top of the measure, and it turns into a bar chart. Drag in another measure and it adds another bar, or add an attribute and it turns into a cluster or a scatterplot. We can turn this into another data container, like a list, with a simple right-click. Now for the interesting bit – drag in another measure onto the screen. The list just created will filter that measure. Just like the “Global Filters” from Workspace, you can filter any object by clicking on any item in that list. Furthermore, users can now drag in an iterator, similar to the item in Active Reports. But this one can automatically loop through members.

I can’t do it justice without an animated screenshot. Instead, just watch the video here: https://www.youtube.com/watch?v=bRbulHoUQC4

Navigating

The Cognos Connection screen is completely revamped. We won’t have the same system with tabs across the top and navigating folders. I’m personally sceptical of this, and nobody’s been able to give a satisfactory answer about where the tabs from C10 will actually go. This is actually my biggest concern when it comes to customer adoption.

The back end is mostly unchanged. Reports still use the same XML format, and the Cognos content store is (supposedly) still the same. This fact, coupled with the option to use the old Report Viewer, gives me hope that upgrading should be quick and painless.

On the non-Cognos-centric side, Watson is being pushed hard now. There’s even a way to import the results from Cognos reports directly into the Watson web app. It seems to work through a CMS call through your browser. Let’s say you have a list report. You provide the location for that report, answer all of the prompts, and your browser will run it in the background and send the results back to Watson. They warned that this can be very slow with large datasets. Stretch, walk around, get an expensive coffee from that little shop on the other side of town slow. Watson is now powering several different applications, some of which were showcased throughout Insight. Research, shopping, travel, hospital, robots – there seems to be very little that Watson can’t do.

As usual, IBM Insight was a lot of fun and I strongly recommend attending. The networking possibilities alone are phenomenal; coupled with inexpensive certification testing and shiny toys (I got to fly a drone, and I saw a 3D printed car driven by a robot, and I got to play with a Van de Graaff generator, and probably had more interesting experiences I’m forgetting), they make Insight a unique experience.

Addendum: My apologies for the lack of updates and replies to comments recently; my current client is taking all of my time. The current phase of the project is nearly over, so I should soon have some time to go over my list.