One of the greatest strengths of Cognos has always been the ease with which data can be managed, queried, and presented. With a well-designed data model, the barrier to building accurate reports is very low. For all of the fancy interactive features, neat tricks, and convoluted JavaScript techniques, the actual process of building a simple report to present important information is very easy.

Now what happens if you want to build a portal to take that information and present it to end users? With a tool as complex and as extensible as Cognos, there are many ways of exposing Cognos to the world. What follows are a few of them. One important note first: I am not factoring licensing into this article. Licensing costs can often choose your solution for you. Letting the public use Report Studio will probably cost more than saving a report to PDF and manually attaching it to the website, but it’s ultimately down to your license agreement.

Schedule report runs to save to a folder. This is a simple idea. You build a report, schedule it to save to a folder, and run a process to upload it to your website. I’ve seen everything from complex examples, where a process scans the folder for new reports every few seconds and dynamically updates the website when it finds the output, to simple examples, where the output report is dumped onto an FTP site for people to download at their leisure.
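The folder-scanning process described above can be sketched in a few lines. This is a minimal illustration, not a production uploader: the function name `publish_new_outputs`, the folder layout, and the list of file extensions are all assumptions of mine, and the "upload" is simply a copy into a directory the web server is assumed to serve.

```python
from pathlib import Path
import shutil


def publish_new_outputs(output_dir, web_dir, seen, suffixes=(".pdf", ".html")):
    """Copy report outputs that have not been published yet.

    output_dir: folder the scheduled Cognos report saves into (hypothetical).
    web_dir:    folder served by the website (hypothetical).
    seen:       set of file names already published; updated in place.
    """
    published = []
    for path in sorted(Path(output_dir).iterdir()):
        # Skip sub-folders and anything that is not a report output.
        if not path.is_file() or path.suffix.lower() not in suffixes:
            continue
        if path.name in seen:
            continue
        target = Path(web_dir) / path.name
        shutil.copy2(path, target)  # the "upload" step in this sketch
        seen.add(path.name)
        published.append(target)
    return published
```

Calling this from a loop with a short `time.sleep`, or from a scheduled job, gives the "scan every few seconds" behaviour. A real version would also need to make sure Cognos has finished writing a file before copying it.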

Some sites simply expose Cognos directly to the internet, granting visitors anonymous access. A simple Google search will find you many examples of this. One example is the Office of Personnel Management (https://www.fedscope.opm.gov/). Click on a data cube and you’ll find yourself using PowerPlay Studio. There are no actual predefined reports to speak of here, but people who use it are probably more interested in the slice-and-dice capabilities provided. The benefit here is obvious. It’s Cognos, pure and simple. All of the benefits, and drawbacks, of using Cognos are here.

iFrames. You might be a government organization offering reports through a complicated series of generated iFrames, such as those found at the New Hampshire Department of Health and Human Services. When an interactive dashboard is run (such as https://wisdom.dhhs.nh.gov/wisdom/#Topic_00FD0704951145F793A8C5424D352FBF_Anon), it loads several widgets talking to predefined reports. Each widget contains the parameter information Cognos needs to run and return the report specifically for that widget. You could have one widget showing hospitalizations by day as a line graph and another showing fatalities by month, with both widgets pointing to the same report. This is a brilliant and extensible solution. It allows the dashboard developer to use the same reports multiple times. As each widget is an iFrame, the user has all of the capabilities of native Cognos: drill-throughs, prompting, and any other feature report authors could put in. The drawback of this technique is that each widget runs a separate report, with all the overhead associated with it. If you anticipate a large audience, with thousands of hits an hour, this will cause system stability issues.

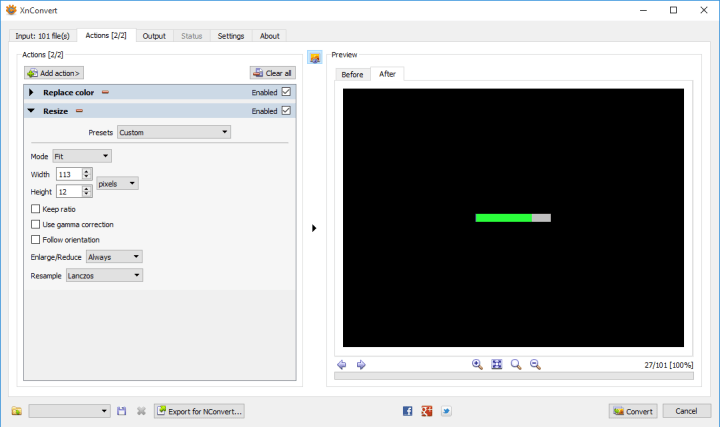

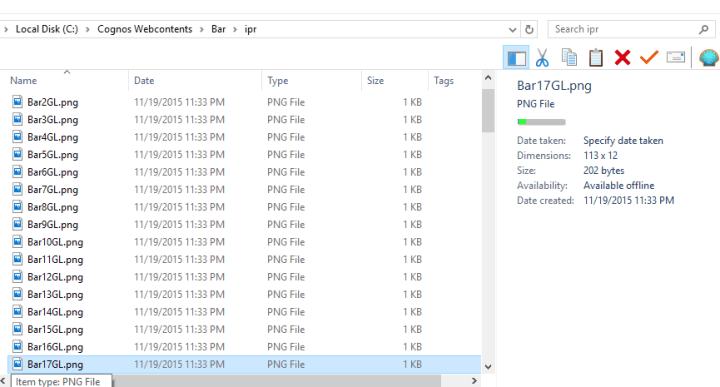
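To make the "same report, different parameters" idea concrete, here is a rough sketch of how a dashboard might generate one iFrame per widget. The gateway URL, report search path, and prompt names are hypothetical. The query parameters (`b_action`, `ui.action`, `ui.object`, `run.outputFormat`, `run.prompt`, and the `p_` prefix for prompt values) are the classic Cognos Viewer URL parameters as I remember them, so check them against the documentation for your version before relying on this.

```python
from html import escape
from urllib.parse import urlencode


def report_url(gateway, search_path, prompts, output_format="HTML"):
    """Build a URL that runs one report with the given prompt values."""
    params = {
        "b_action": "cognosViewer",   # assumed classic viewer parameters
        "ui.action": "run",
        "ui.object": search_path,
        "run.outputFormat": output_format,
        "run.prompt": "false",        # skip the prompt page
    }
    for name, value in prompts.items():
        params["p_" + name] = value   # p_<parameter name> carries the value
    return gateway + "?" + urlencode(params)


def iframe_tag(url, width=600, height=400):
    """Wrap a report URL in an iFrame, escaping it for use in an attribute."""
    src = escape(url, quote=True)
    return f'<iframe src="{src}" width="{width}" height="{height}"></iframe>'


# Hypothetical server and report: two widgets backed by the same report.
GATEWAY = "https://cognos.example.com/ibmcognos/cgi-bin/cognos.cgi"
REPORT = "/content/folder[@name='Health']/report[@name='Trends']"
widgets = [
    iframe_tag(report_url(GATEWAY, REPORT, {"Measure": "Hospitalizations", "Grain": "Day"})),
    iframe_tag(report_url(GATEWAY, REPORT, {"Measure": "Fatalities", "Grain": "Month"})),
]
```

Each entry in `widgets` still triggers its own report run when the page loads, which is exactly the overhead mentioned above.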

Mashup Services. I’ve been using Cognos Mashup Services (CMS) quite a bit lately. It’s an extension of the SDK that simplifies exposing Cognos reports as an API. There are many ways to use CMS to build your portal. With a simple REST call, your pixel-perfect reports, with graphs and formatting, can be returned as fully formed HTML and embedded directly in your webpage. The issue here is that all native Cognos interactivity is gone. No prompts, no drill-downs. Any interactive features have to be built by hand. But this may not necessarily be a bad thing. The Cognos outputs, while they can be coerced into looking nice, do not live up to modern web design standards. Features like sticky headers when scrolling down, client-side table sorting, or more-info drawers and popovers are difficult to build. By using CMS to generate your data in a compact format, like JSON, you can merge the powerful querying engine with modern web design. Of course, this is entirely predicated on you having web developers on your staff, or an application already set up to work with Cognos data. And speaking of which, I’ll revisit this one in the future.
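As a rough illustration of the "simple REST call", the sketch below builds a CMS request URL and fetches the response as text. The gateway address, report store ID, and prompt name are hypothetical. The `/rds/reportData/report/<id>` path, the `fmt` parameter, and the `p_` prefix for prompt values are the CMS REST conventions as I recall them, so treat them as assumptions to verify. Authentication is left out entirely, which only works if anonymous access is enabled.

```python
from urllib.parse import quote, urlencode
from urllib.request import urlopen


def cms_report_url(gateway, store_id, fmt="JSON", prompts=None):
    """Build a CMS REST URL for one report (path layout is an assumption)."""
    query = {"fmt": fmt}                 # e.g. JSON or HTMLFragment
    for name, value in (prompts or {}).items():
        query["p_" + name] = value       # prompt values, as with report URLs
    report_id = quote(store_id, safe="")
    return f"{gateway}/rds/reportData/report/{report_id}?{urlencode(query)}"


def fetch_report(url, timeout=30):
    """Fetch the report output as text; no authentication is attempted."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Hypothetical gateway and store ID; nothing is fetched here.
example_url = cms_report_url(
    "https://cognos.example.com/ibmcognos/cgi-bin/cognos.cgi",
    "i1234ABCD",
    fmt="HTMLFragment",
    prompts={"Year": "2015"},
)
```

With an HTML format the returned text can be dropped into a page as-is, while a JSON response would be handed to whatever front-end code renders the tables and charts.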

So, to summarize:

Scheduling a report – Simple, non-interactive, low cost. You need someone to set up the automation to get the output into the webpage, and then it’s fire and forget.

iFrames – Build the reports as normal, and use URL parameters to load them. As long as you have a report developer, this is the easiest solution. Some overhead on the server when running reports.

Mashup – Medium to difficult. Any interactivity on the page needs to be coded in. Expertise will be needed to embed the output into the webpage. If you’re pulling HTML, you can expect some unreasonably large results. Dataset outputs, like JSON or Atom, will need additional post-processing.